I came across WPTouch, a WordPress plugin/theme that optimizes a blog’s appearance for fast loading on mobile devices such as the iPhone, Android and so on. It’s trivial to install (just like any other plugin) and once done, browsing this site on my iPhone is much nicer than viewing the full-fledged desktop theme.

When is a Bad Plate Bad?

When running a high-throughput screen, one usually deals with hundreds or even thousands of plates. Due to the vagaries of experiments, some plates will not be very good. That is, the data will be of poor quality for a variety of reasons. Usually we can evaluate various statistical quality metrics to assess which plates are good and which ones need to be redone. A common metric is the Z-factor, which uses the positive and negative control wells. The problem is that if one or two wells have a problem (say, no signal in the negative control) then the Z-factor will be very poor. Yet the plate could still be used if we just mask those bad wells.

Now, for our current screens (100 plates) manual inspection is boring but doable. As we move to genome-wide screens we need a better way to distinguish truly bad plates from plates that could still be used. One approach is to move to other metrics – SSMD (defined here and applications to quality control discussed here) is regarded as more effective than Z-factor – and in fact it’s advisable to look at multiple metrics rather than depend on any single one.

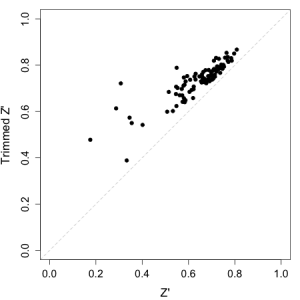

An alternative trick is to compare the Z-factor for a given plate to the trimmed Z-factor, which is evaluated using the trimmed means and standard deviations. In our setup we trim 10% of the positive and negative control wells. For a plate that appears to be poor due to one or two bad control wells, the trimmed Z-factor should be significantly higher than the original Z-factor. But for a plate in which, say, the negative control wells all show poor signal, there should not be much of a difference between the two values. The analysis can be rapidly performed using a plot of the two values, as shown below. Given such a plot, we’d probably consider redoing plates whose trimmed Z-factor is less than 0.5 and which lie close to the diagonal. (Though for RNAi screens, Z’ = 0.5 might be too stringent.)

From the figure below, just looking at Z-factor would have suggested 4 or 5 plates to redo. But when compared to the trimmed Z-factor, this comes down to a single plate. Of course, we’d look at other statistics as well, but this is a quick way to identify plates with truly poor Z-factors.
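The comparison described above can be sketched in a few lines of Python. This is only an illustration, not the code used for our screens: it assumes the usual definition Z’ = 1 − 3(σp + σn)/|μp − μn|, it assumes that "trimming 10%" means dropping 10% of the wells from each tail, and the function names, the `tol` threshold for "close to the diagonal" and the well values are all made up for the example.

```python
import statistics


def z_factor(pos, neg):
    """Z' = 1 - 3 * (sd_pos + sd_neg) / |mean_pos - mean_neg|."""
    mu_p, mu_n = statistics.mean(pos), statistics.mean(neg)
    sd_p, sd_n = statistics.stdev(pos), statistics.stdev(neg)
    return 1 - 3 * (sd_p + sd_n) / abs(mu_p - mu_n)


def trim(values, frac=0.1):
    """Drop the lowest and highest `frac` of the sorted values (assumed scheme)."""
    vals = sorted(values)
    k = int(len(vals) * frac)
    return vals[k:len(vals) - k] if k else vals


def trimmed_z_factor(pos, neg, frac=0.1):
    """Z-factor computed on the trimmed control wells."""
    return z_factor(trim(pos, frac), trim(neg, frac))


def looks_truly_bad(z, z_trim, cutoff=0.5, tol=0.1):
    """Still below the cutoff after trimming, and close to the diagonal.

    `tol` is a hypothetical threshold chosen for illustration only.
    """
    return z_trim < cutoff and abs(z_trim - z) < tol


# Invented control wells: the negative controls contain one bad well (60).
pos = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101]
neg = [10, 11, 9, 10, 12, 8, 10, 11, 9, 60]

z = z_factor(pos, neg)
z_trim = trimmed_z_factor(pos, neg)
redo = looks_truly_bad(z, z_trim)
```

For this invented plate the single bad well drags the plain Z-factor below 0.5, while the trimmed value is well above it, so the plate would not be flagged for a redo.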

A GPL3 Oracle Cheminformatics Cartridge

Some time back I had mentioned a new cheminformatics toolkit, Indigo. Recently, Dmitry from SciTouch let me know that they had also developed Bingo, an Oracle cartridge based on Indigo, to perform cheminformatics operations in the database. This expands the current ecosystem of Open Source database cartridges (PGChem, MyChem, OrChem), which together pretty much cover all the main RDBMSs (Postgres, MySQL and Oracle). SciTouch have also provided a live instance of their database and associated cartridge, so you can play with it without requiring a local Oracle install. (It’d be useful to provide some details of the hardware that the DB is running on, so that timing numbers get some context.)

Slides from a Guest Lecture at Drexel University

On Thursday I joined Antony Williams as a guest lecturer in Jean-Claude Bradley’s class on chemical information retrieval at Drexel University. Using a combination of WebEx and Skype, we were able to give our presentations – seamlessly joining three different locations. Technology is great! Tony gave an excellent talk on citizen science and ChemSpider and I spoke about similarity and searching. Jean-Claude has also put up an audio version.

Are Bioinformatics Results Too Good To Be True?

I came across an interesting paper by Ann Boulesteix where she discusses the problem of false positive results being reported in the bioinformatics literature. She highlights two underlying phenomena that lead to this issue – “fishing for significance” and “publication bias”.

The former phenomenon is characterized by researchers identifying datasets on which their method works better than others, or by a new method being (unconsciously) optimized for a given set of datasets. Then there is also the issue of validation of new methodologies, where she notes:

… fitting a prediction model and estimating its error rate using the same training data set yields a downwardly biased error estimate commonly termed as “apparent error”. Validation on independent fresh data is an important component of all prediction studies…
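To make the "apparent error" point concrete, here is a small Python sketch of my own (it is not taken from the paper). It assumes a toy setup in which the labels are pure noise, and uses a hand-rolled 1-nearest-neighbour classifier; all names and the sample sizes are invented for the illustration.

```python
import random


def nearest_label(x, train):
    """Label of the training point closest to x (1-nearest-neighbour)."""
    return min(train, key=lambda item: abs(item[0] - x))[1]


def error_rate(data, train):
    """Fraction of points in `data` misclassified by 1-NN fitted on `train`."""
    wrong = sum(nearest_label(x, train) != y for x, y in data)
    return wrong / len(data)


def make_noise(rng, n):
    """Pure noise: the feature carries no information about the label."""
    return [(rng.random(), rng.randint(0, 1)) for _ in range(n)]


rng = random.Random(0)
train = make_noise(rng, 200)
fresh = make_noise(rng, 200)

apparent_error = error_rate(train, train)  # evaluated on the training data
fresh_error = error_rate(fresh, train)     # evaluated on independent data
```

Every training point is its own nearest neighbour, so the apparent error is zero even though there is nothing to learn. On the fresh data the classifier is no better than a coin flip, and its error hovers around one half.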

Boulesteix also points out that true, prospective validation is not always possible, since the data may not be easily accessible or even available. She also notes that some of these problems could be mitigated by authors being very clear about the limitations and dataset assumptions they make. As I have been reading the microarray literature recently to help me with RNAi screening data, I have seen the problem firsthand. There are hundreds of papers on normalization techniques and gene selection methods, and each one claims to be better than the others. But in most cases, the improvements seem incremental. Is the difference really significant? It’s not always clear.

I’ll also note that the same problem is likely present in the cheminformatics literature. There are many papers which claim that their SVM (or some other algorithm) implementation does better than previous reports on modeling something or the other. Is a 5% improvement really that good? Is it significant? Luckily there are recent efforts, such as SAMPL and the solubility challenge, to address these issues in various areas of cheminformatics. Also, there is a nice and very simple metric recently developed to compare different methods (focusing on rankings generated by virtual screening methods).

The issue of publication bias also plays a role in this problem – negative results are difficult to publish and hence a researcher will try and find a positive spin on results that may not even be significant. For example, a well designed methodology paper will be difficult to publish if it cannot be shown to be better than other methods. One could get around such a rejection by cherry picking datasets (even when noting that such a dataset is cherry picked, it limits the utility of the paper in my opinion), or by avoiding comparisons with certain other methods. So while a researcher may end up with a paper, it’s more CV padding than an actual improvement in the state of the art.

But as Boulesteix notes, “a negative aspect … may be counterbalanced by positive aspects”. Thus even though a method might not provide better accuracy than other methods, it might be better suited for specific situations or may provide a new insight into the underlying problem or even highlight open questions.

While the observations in this paper are not new, they are well articulated and highlight the dangers that can arise from a publish-or-perish and positive-results-only system.