Over the last few weeks I’ve been getting up to speed on RNAi screening (see Lord et al for a nice overview) and one of the key features in this approach is to reliably select hits for followup. In many ways it is similar to hit selection in small molecule HTS but also employs techniques from the analysis of microarray data.

Very broadly, there are two types of selection methods: statistical and knowledge based. In general, one employs a statistical method first, possibly followed by triaging the initial list using a knowledge based method. As with small molecule HTS, true hits (true positives or TP) in general are the outliers, though it’s possible that assay artifacts will also look like true hits and these would be false positives (FP). But in general, the outliers are easy and the real challenge is with identifying moderately active siRNA’s (or compounds) as actual hits.

One can further divide statistical methods into ranking procedures or thresholding procedures. In the former one ranks the siRNA‘s by some metric and selects as many as can be followed up using available resources. In the latter, one identifies a threshold, above which any siRNA is regarded as a hit. One of the key features of many statistical methods is that they provide bounds on the FP and FN (false negative) rates. This is especially important with thresholding methods.

But what about siRNA‘s that lie just below the threshold? Should one ignore them? Is it possible to further investigate whether they might actually be hits? This is where a knowledge based approach can provide a second level of confirmation for false negatives or positives. This post summarizes recent work on hit selection in RNAi screens.

Statistical methods

Many statistical methods are similar to or derived from approaches employed in HTS experiments for small molecules. An excellent overview of statistical methods in HTS analysis is given by Malo et al and covers both assay quality metrics as well as hit selection methods.

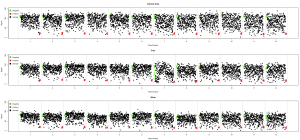

One of the most common methods is to use a threshold of \(\mu \pm k \sigma\), where \(\mu\) and \(\sigma\) are the mean and standard deviation of the sample wells respectively and k is usually taken to be 3. This is also known as the z-score method, and assumes a normal distribution of signal values. However, in RNAi screens it’s not uncommon to have a number of extreme outliers. Such a situation would skew the mean based threshold to larger values, leading to fewer prospective hits being identified.

Instead, Zhang et al (as well as Chung et al) suggest the use of the \(median \pm 3 \textrm{MAD}\) method, where MAD is the mean absolute deviation and is given by

\(\textrm{MAD} = 1.4826\, median( | x_i – median(x) | ) \)

where \(x_i\) is the signal from well $\latex i$ and \(x\) represents all the sample wells. The key feature of this approach is that it is more robust to outliers and violations of the assumption that the signal data is normally distributed. Chung et al present a nice simulation study, highlighting the effectiveness of MAD based selection over mean based.

However, Zhang et al also note that the MAD method is not robust to skewed distributions. To address this they suggest a quartile based threshold method. For the sample data, one calculates the first, second and third quartiles (\(Q_1, Q_2, Q_3\)) and generates the lower and upper thresholds for hit selection as the smallest observed value just above \(Q_1 – c IQR\) and the largest observed value just below \(Q_3 – c IQR\) respectively. In both cases, \(IQR = Q_3 – Q_1\). A key feature of this method is the choice of \(c\), and is based on the desired targeted error rate (based on a normal distribution). Zhang et al show that one can choose \(c\) such that the resultant error rate is equivalent to that obtained from a mean or median-based thresholding scheme, for a given \(k\). Thus for \(k = 3\) in those methods, the quartile method would set \(c = 1.7239\).

In contrast to the above methods, which define a threshold and automatically select siRNA’s above the threshold as hits, one can order the wells using a metric and then simply select the top \(n\) wells for followup, where \(n\) is dictated by the resources available for followup. In this vein, Wiles et al discuss a variety of ranking metrics methods for RNAi screens. Some of the methods they discuss include simple background substraction quantile normalization and so on IQR normalization. Surprisingly, in their analysis, ranking of siRNA’s based on background subtraction outperformed IQR normalization.

Zhang et al (the Merck people have been busy!) described another ranking procedure based on the Strictly Standardized Mean Difference (SSMD) between signals from individual siRNA’s and a negative control. While conceptually similar to simple schemes such as percentage inhibition, this method is reported to be more robust to variability in the sample and control groups and also has a probabilistic interpretation. The latter aspect allowed the authors to develop a thresholding scheme, based on controlling the false positive and false negative rates.

Another approach described by Konig et al makes use of the fact that in RNAi screens, a given gene is targeted by 2 to 4 different siRNA’s. In contrast, none of the above methods, consider this aspect of an RNAi screen. Their method, termed RSA (redundant siRNA activity) analysis, initially ranks all wells in an assay based on their signals. Next, they consider the rank distribution of all siRNA’s targeting a given gene and determine a p-value, which indicates whether all the siRNA’s targeting a given gene have low ranks. They then rerank all wells based on their p-values , followed by their individual signals. The net result of this is that, even though a gene might have 4 wells (i.e., siRNA’s) exhibiting moderate activity (and so might be just below the threshold in a mean or MAD based analysis), it will be “weighted” more heavily than a gene with, say, just one highly active siRNA. Their experiments and analysis indicate that this is a useful method and their implementations (C#, Perl, R) are available. A nice side effect of this method is that they are able suggest an optimal (in terms of efficacy) number of redundant siRNA’s per gene.

Platewise or experimentwise?

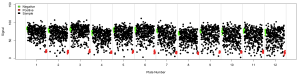

One aspect that I haven’t mentioned is whether these analyses should be performed in a platewise manner or across all the plates in an experiment (i.e. experimentwise manner). Given that an RNAi screen can include a large number of plates, it’s possible that plate specific effects might skew an anaysis that considers all plates together. Thus platewise analysis can handle different systematic errors within plates. At the same time, a platwise analysis would be ignorant of any clustering that may be present amongst the hits, which would be identifiable is one performed the analysis in an experimentwise manner. Zhang et al suggest the following scheme, based on their observations that platewise analysis tends to have a higher probability of identifying true hits.

- Select a thresholding method

- Apply the method in an experimentwise manner on the normalized data, to see if hit clusters are present

- If such clusters are present, repeat the above step on un-normalized data to check whether clustering is an artifact

- If no clusters are observed, perform the analysis in a platewise manner and select hits from each plate

While a useful protocol, Zhang et al describe a Bayesian approach, derived from a similar method for microarray data (Newton et al). One of the key features of this approach is that it incorporates aspects of a platewise and experimentwise analysis in a single framework, obviating the need to choose one or the other.

Knowledge based methods

The statistics based methods work on the basis of relatively large samples and employ a variety of distributional assumptions. They’re certainly useful as a first pass filter, but various questions remain: Should we totally ignore wells that lie just below a threshold? Is there a way to check for false negatives? False positives?

To address these questions, before running a follow up screen, one can make use of external knowledge. More specifically, genes do not exist in isolation. Rather, they can be considered in terms of networks. Indeed, studies of PPI‘s (Chuang et al) and disease genes (Kohler et al) have suggested a guilt by association principle – genes that are close together in a network are more likely to exhibit similar functions or lead to similar phenotypes.

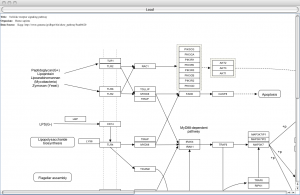

Wang et al employed this principle to characterize the genes targeted by siRNA’s in a screen, by constructing an interaction network (based on STRING data) and analyzing the connectivity of the genes from the screen in this context. Their observation was that genes corresponding to hits from the RNAi screen exhibited a higher degree of connectivity, compared to random networks. While an interesting approach, some of it does seem a little ad hoc. Given the fact that it depends on PPI data, the networks constructed from screening results may be incomplete, if the PPI database does not contain interactions for the genes in question. In addition, while their network based scoring method seems to correlate somewhat with followup results, it’s not very consistent. However, the approach makes sense and correctly catches false negatives in their test cases. Their R code for the network based scoring system is available.

Interestingly, I haven’t found many other studies of such knowledge based hit selection methods. While networks are one way to identify related genes, other methods could be considered and it would be interesting to see how they compare.