Recently I came across a fantastic article that explored how far ahead Google Maps is of Apple Maps, focusing in particular on Areas of Interest (AOIs), and how Google achieves this lead through its competencies in massive data and massive computation, resulting in a moat. The conclusion is that:

> Google has gathered so much data, in so many areas, that it’s now crunching it together and creating features that Apple can’t make—surrounding Google Maps with a moat of time

But the key point that caught my eye was the idea that Google Maps’ sophistication is a byproduct of byproducts. As the article points out, AOIs are a byproduct of buildings (themselves a byproduct of satellite imagery) and places (a byproduct of Street View), and thus AOIs are byproducts of byproducts.

This observation led me to think about how it could apply in a biomedical setting. In other words, given disparate biomedical data types, what new data types can be generated from them, and, using those derived data types, what further data types could be derived in turn? (“Data type” may not be the right term here; “entity” may be more suitable.)

One interpretation of this idea is integrative resources, where disparate (but related) data types are connected to each other in a single store, allowing one to (hopefully) make non-obvious links between entities that explain a higher-level system or phenomenon. Recent examples include Pharos and MARRVEL. However, these don’t really fit the concept of byproducts of byproducts, as neither of these resources actually generates new data from pre-existing data, at least not on its own.

So are there better examples? One that comes to mind is the protein folding problem. While one could fold proteins de novo, it’s a little easier if constraints are provided, such as those derived from NMR and from amino acid (AA) coevolution. As a result, we can view predicted protein structures as a byproduct of NMR constraints (a byproduct of structure determination) and of AA coevolution data (a byproduct of gene sequencing). An example of this is Tang et al., 2015.

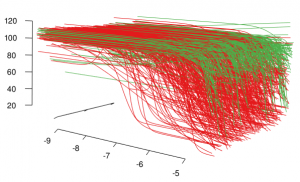
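To make the sequence-to-constraint step concrete, here is a minimal sketch that turns a multiple sequence alignment into candidate residue contacts by scoring pairs of alignment columns with mutual information. Mutual information is a simpler stand-in for the coevolution statistics used in practice (which typically add corrections such as direct coupling analysis), and this is not the method of Tang et al. The function names and the toy alignment are hypothetical, for illustration only.

```python
import math
from collections import Counter
from itertools import combinations


def mutual_information(msa, i, j):
    """Mutual information (in nats) between alignment columns i and j."""
    n = len(msa)
    count_i = Counter(seq[i] for seq in msa)
    count_j = Counter(seq[j] for seq in msa)
    count_ij = Counter((seq[i], seq[j]) for seq in msa)
    mi = 0.0
    for (a, b), n_ab in count_ij.items():
        p_ab = n_ab / n
        p_a = count_i[a] / n
        p_b = count_j[b] / n
        mi += p_ab * math.log(p_ab / (p_a * p_b))
    return mi


def predicted_contacts(msa, top_k=1, min_separation=2):
    """Rank column pairs by MI; pairs too close in sequence are skipped
    because they are trivially near each other in the structure."""
    length = len(msa[0])
    scores = []
    for i, j in combinations(range(length), 2):
        if j - i >= min_separation:
            scores.append((mutual_information(msa, i, j), i, j))
    scores.sort(reverse=True)
    return [(i, j) for _, i, j in scores[:top_k]]


# Hypothetical toy alignment: columns 0 and 3 covary (A pairs with E, R with D),
# column 1 is conserved and column 2 varies independently of the others.
msa = ["AKLE", "RKLD", "AKME", "RKMD"]
print(predicted_contacts(msa, top_k=1))
```

Here the coupled pair (0, 3) ranks first. In a real pipeline, ranked pairs like this would be converted into distance restraints for structure prediction, which is exactly the "byproduct of a byproduct" step.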

Another example that comes to mind is the inference of gene (or signalling, metabolic, etc.) networks, which go from, say, gene expression data to a network of genes. But going by the Google Maps analogy above, the gene network is only the first-level byproduct. One could imagine a computation that processes a set of (inferred) gene networks to generate higher-level structures (say, spatial localization or differentiation). But this is a bit fuzzier than the protein structure problem.
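The two levels can be sketched in a few lines. This is a minimal illustration under loud assumptions: network inference is reduced to thresholding the absolute Pearson correlation between expression profiles (real methods are considerably more sophisticated), and the "higher-level structure" is reduced to connected components of the network. Gene names, expression values and the 0.9 threshold are all made up.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def coexpression_network(expr, threshold=0.9):
    """First-level byproduct: an edge between genes whose |r| >= threshold."""
    edges = set()
    for g1, g2 in combinations(sorted(expr), 2):
        if abs(pearson(expr[g1], expr[g2])) >= threshold:
            edges.add((g1, g2))
    return edges


def modules(genes, edges):
    """Second-level byproduct: connected components of the inferred network."""
    adjacency = {g: set() for g in genes}
    for a, b in edges:
        adjacency[a].add(b)
        adjacency[b].add(a)
    seen, components = set(), []
    for start in sorted(genes):
        if start in seen:
            continue
        stack, component = [start], set()
        while stack:  # iterative depth-first search
            gene = stack.pop()
            if gene in seen:
                continue
            seen.add(gene)
            component.add(gene)
            stack.extend(adjacency[gene] - seen)
        components.append(component)
    return components


# Hypothetical expression profiles across five samples.
expr = {
    "geneA": [1, 2, 3, 4, 5],
    "geneB": [2, 4, 6, 8, 10],
    "geneC": [5, 4, 3, 2, 1],
    "geneD": [1, 3, 1, 3, 1],
    "geneE": [2, 6, 2, 6, 2],
}
network = coexpression_network(expr)
print(modules(expr, network))
```

The choice of connected components is only a placeholder: any computation that takes the inferred network as its input, rather than the raw expression data, would play the same second-level role.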

Of course, this starts to break down when we take into account errors in the individual components. For example, sequencing errors can introduce errors into the coevolution data, which can get carried over into the predicted protein structure. This isn’t inevitable, but it does require validation and possibly curation. And in many cases, large, correlated datasets can allow one to account for errors (or work around them).

This is mainly speculation on my part, but it seems interesting to try to think of how one can combine disparate data types to generate new ones, and repeat this process to come up with something that was not available (or not obvious) from the initial data types.