At work, a colleague gave an interesting presentation on characterizing associations between continuous and categorical variables. I expect that I will be facing this issue in some upcoming work so was doing a little reading and made some notes for myself.

Given a continuous variable Y and a categorical variable G, is the distribution of Y independent of the levels of G? One can address this using parametric or non-parametric methods. Due to the superior power of parametric methods we start by considering them and only if we completely fail to satisfy the distributional assumptions of such methods, do we move to the non-parametric methods.

A simple approach is to convert G to an indicator variable matrix, such that each level of G becomes a binary vector, and then use this matrix and Y to fit a linear model. One can then perform an ANOVA on the fit to check whether Y is independent of the groups. This approach assumes that Y is normally distributed, and that variances are equal amongst the groups.

A simple example in R is as follows. We first create a dataset in which there is no difference in the mean or variance across the groups, one in which the means differ and one in which the variances differ

1 2 3 4 5 6 7 8 9 10 11 | G <- factor(sample(c('A','T','C','G'), 100, TRUE, c(0.2,0.2,0.2,0.2))) Y1 <- rnorm(100, mean=10, sd=3) Y2 <- Y1 Y2[which(G=='G')] <- sample(rnorm(length(which(G=='G')),mean=4,sd=3)) Y3 <- Y1 Y3[which(G=='G')] <- sample(rnorm(length(which(G=='G')),mean=10,sd=6)) |

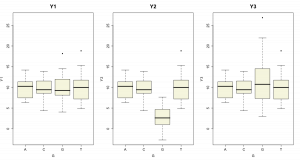

A summary of the Y’s grouped by G are given below.

We now run the ANOVA, using the first dataset.

1 2 | fit <- lm(Y1 ~ G, data=data.frame(Y1,G)) anova(fit) |

Running this gives us

1 2 3 4 5 | Analysis of Variance Table Response: Y1 Df Sum Sq Mean Sq F value Pr(>F) G 3 0.74 0.25 0.0304 0.9928 Residuals 96 777.19 8.10 |

If we consider the 0.05 significance level, we would not reject the null hpothesis that Y is independent of G. If we now rerun the ANOVA using the second dataset

1 2 | fit <- lm(Y2 ~ G, data=data.frame(Y2,G)) anova(fit) |

we get

1 2 3 4 5 | Analysis of Variance Table Response: Y2 Df Sum Sq Mean Sq F value Pr(>F) G 3 1131.65 377.22 49.321 < 2.2e-16 *** Residuals 96 734.22 7.65 |

which suggests that we reject the null hypothesis and accept the alternative that there is the Y’s are not independent of the groups (which is true by design).

Furthermore, it really only indicates whether there is a dependence between the groups and the means of the group members – it doesn’t tell us anything about lack of independence in terms of scale parameters. This is not surprising since the linear model is defined to have a constant variance. Thus, if we want to investigate dependence of the variance on groups, we should look at distributions of residuals or other approaches to identifying heteroscedacity (such as Levenes test).

In case we cannot satisfy the assumptions (even after investigating possible transformations of the data) required for ANOVA, we can move to parametric methods, which are easy to run, but have a lower power. For this problem, the Kruskal-Wallis test and the Fligner-Killeen test come in handy. These methods are in the base stats package but also come with the coin package which is handy for running a variety of independence tests.

The Kruskal-Wallis test makes no assumptions about normality, but does assume that the distributions amongst the levels of G are of the same shape and have the same variance (and so, the differences are due to differences in the means). The Fligner-Killeen test also avoids the normality assumption bu in this case, has the null hypothesis that the variances in the groups are the same. Thus ideally, we’d run the Kruskal-Wallis first and if we could not reject the null hypothesis we then investigate whether there is a difference in the variances across the groups by the Fligner-Killeen tests.

Some examples highlight the analysis. First, we consider the dataset that is independent of the groups (Y1):

1 2 3 4 5 6 | > kruskal_test(Y1 ~ G, data=data.frame(Y1,G)) Asymptotic Kruskal-Wallis Test data: Y1 by G (A, C, G, T) chi-squared = 0.0628, df = 3, p-value = 0.9959 |

which indicates that we fail to reject the null hypothesis that the distribution of Y1 is independent of the levels of G. We then consider the dataset where the means are dependent on the groups

1 2 3 4 5 6 | > kruskal_test(Y2 ~ G, data=data.frame(Y2,G)) Asymptotic Kruskal-Wallis Test data: Y2 by G (A, C, G, T) chi-squared = 57.3197, df = 3, p-value = 2.196e-12 |

which indicates the we can reject the null hypothesis. However, note that for Y3, where the means are independent of the groups but the variance differ, the Kruskal-Wallis test is not useful:

1 2 3 4 5 6 | > kruskal_test(Y3 ~ G, data=data.frame(Y3,G)) Asymptotic Kruskal-Wallis Test data: Y3 by G (A, C, G, T) chi-squared = 1.5399, df = 3, p-value = 0.673 |

On the other hand, if we run the Fligner-Killeen test on these three datasets, we correctly identify Y3 as having its variance dependent on G

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 | > fligner_test(Y1 ~ G, data=data.frame(Y1,G)) Asymptotic Fligner-Killeen Test data: Y1 by G (A, C, G, T) chi-squared = 2.9914, df = 3, p-value = 0.3929 > fligner_test(Y2 ~ G, data=data.frame(Y2,G)) Asymptotic Fligner-Killeen Test data: Y2 by G (A, C, G, T) chi-squared = 2.8202, df = 3, p-value = 0.4202 > fligner_test(Y3 ~ G, data=data.frame(Y3,G)) Asymptotic Fligner-Killeen Test data: Y3 by G (A, C, G, T) chi-squared = 15.3493, df = 3, p-value = 0.001541 |