Recently I came across a NIPS 2015 paper by Vartak et al. that describes a system (APIs + visual frontend) to support the iterative model building process. The problem they are addressing is a common one in most machine learning settings: building multiple models (of different types) using various features, and identifying one or more optimal models to take into production. As they correctly point out, most tools such as scikit-learn, SparkML, etc. focus on providing methods and interfaces to build a single model. It’s up to the user to manage the multiple models and keep track of their performance metrics.

My first reaction was, “why?”. Part of this stems from my use of the R environment, which lets me build up an infrastructure for building multiple models (e.g., caret, e1071), storing them (lists of model objects, RData binary files or even PMML), and subsequently comparing and summarizing them. Naturally, this is specific to me (so not really a general solution) and is essentially a series of R commands. I don’t have the ability to monitor model building progress while simultaneously inspecting models that have already been built.

In that sense, the authors’ statement,

For the most part, exploration of models takes place sequentially. Data scientists must write (repeated) imperative code to explore models one after another.

is correct (though maybe said data scientists should improve their programming skills?). So a system that allows one to organize an exploration of model space could be useful. However, other statements such as

- Without a history of previously trained models, each model must be trained from scratch, wasting computation and increasing response times.

- In the absence of history, comparison between models becomes challenging and requires the data scientist to write special-purpose code.

seem to be relevant only when you are not already working in an environment that supports model objects and their associated infrastructure. In my workflow, the list of model objects is my history!
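To make the "list of model objects as history" idea concrete, here is a minimal sketch in Python rather than R, with two toy learners standing in for real ones. Everything named here (`FittedModel`, `fit_mean`, `fit_line`, `explore`, and the toy data) is hypothetical and for illustration only; none of it comes from SHERLOCK, caret, or any other library.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, List, Sequence, Tuple

Row = Dict[str, float]


@dataclass
class FittedModel:
    """One entry in the history: what was fit, on which features, how well."""
    name: str
    features: Tuple[str, ...]
    predict: Callable[[Row], float]
    rmse: float


def fit_mean(rows: Sequence[Row], target: str, features: Sequence[str]):
    """Baseline: always predict the mean of the target (ignores features)."""
    mu = mean(r[target] for r in rows)
    return lambda row: mu


def fit_line(rows: Sequence[Row], target: str, features: Sequence[str]):
    """Ordinary least squares on exactly one feature."""
    (x_name,) = features
    xs = [r[x_name] for r in rows]
    ys = [r[target] for r in rows]
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return lambda row: intercept + slope * row[x_name]


def rmse(predict: Callable[[Row], float], rows: Sequence[Row], target: str) -> float:
    """Root mean squared error of `predict` on `rows`."""
    return mean((predict(r) - r[target]) ** 2 for r in rows) ** 0.5


def explore(rows, target, candidates) -> List[FittedModel]:
    """Fit every (name, fitter, features) candidate and keep them all."""
    history = []
    for name, fitter, features in candidates:
        predict = fitter(rows, target, features)
        # In-sample error for brevity; a real comparison would use held-out data.
        score = rmse(predict, rows, target)
        history.append(FittedModel(name, tuple(features), predict, score))
    return history


# Toy data (made up): y depends exactly on x1 and only loosely on x2.
rows = [
    {"x1": 1.0, "x2": 2.0, "y": 2.0},
    {"x1": 2.0, "x2": 1.0, "y": 4.0},
    {"x1": 3.0, "x2": 4.0, "y": 6.0},
    {"x1": 4.0, "x2": 3.0, "y": 8.0},
]

history = explore(
    rows,
    "y",
    [
        ("mean", fit_mean, ()),
        ("line", fit_line, ("x1",)),
        ("line", fit_line, ("x2",)),
    ],
)

# Comparison is just a query over the list.
best = min(history, key=lambda m: m.rmse)
for m in sorted(history, key=lambda m: m.rmse):
    print(f"{m.name:5s} features={m.features} rmse={m.rmse:.3f}")
```

The point is only that once every fitted model stays in a list together with its feature set and score, "comparison" reduces to sorting or filtering that list. What this sketch does not give you is persistence across sessions, sharing, or live monitoring, which is where a dedicated system would earn its keep.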

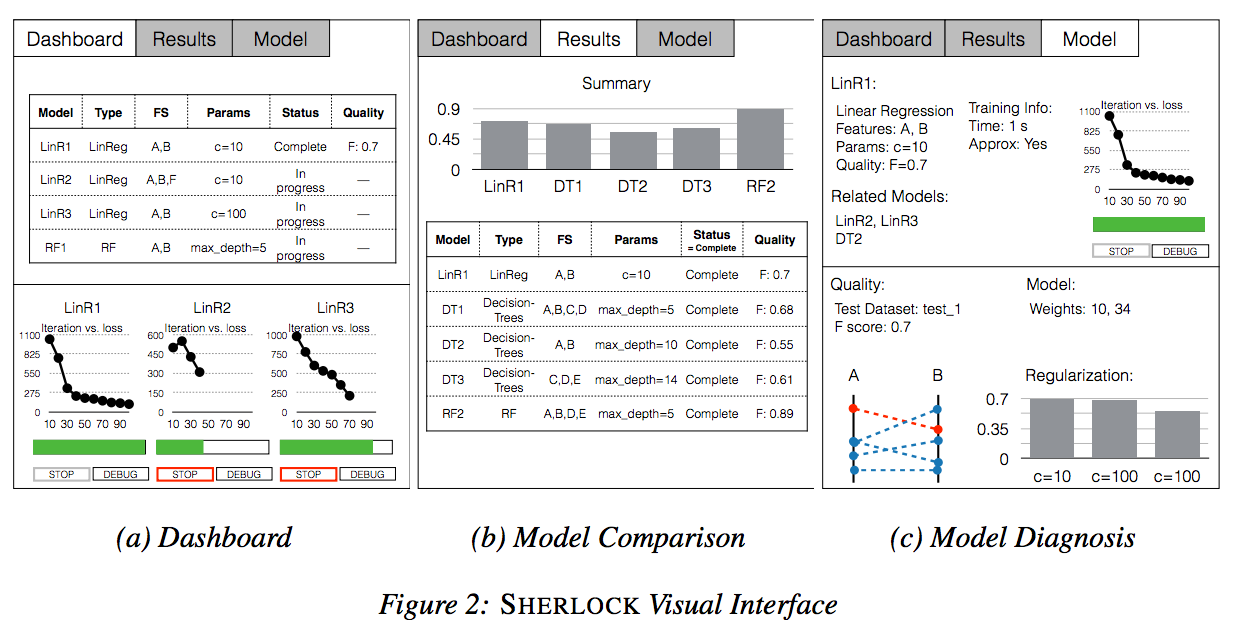

Their proposed system, called SHERLOCK, is built on top of SparkML and provides an API for model building, a database for model storage, and a graphical interface (see above) to all of this. I’m not convinced by some of the design goals. For example, they propose training variations of models (e.g., with different feature sets) based on previously trained models, but wouldn’t you need to retrain the model from scratch if you choose a new feature set? Still, it’ll be interesting to see how this develops. Certainly, the UI will be key: it’s pretty easy to generate a multitude of models with a multitude of features, but in the end a human needs to make sense of it all.

On a separate note, this sounds like something RStudio could take a stab at.

These are very valid points! Model reuse and the overall infrastructure should be in place, and more importantly, in place organization-wide. I build models on a regular basis, as do my colleagues, but to exchange those models we need a shared environment. Some prefer R, some Python; some use descriptor X, others descriptor Y. Person Z built a solubility model a year ago and more data has since arrived: should we update the model or rebuild it? And so on.

Yes, indeed, especially in orgs where multiple people use different tools/platforms (though the proposed prototype is still for a single platform). But I like how this could be a way to *organize* model space exploration.

Probably relevant here – Hidden Technical Debt in Machine Learning Systems: http://papers.nips.cc/paper/5656-hidden-technical-debt-in-machine-learning-systems.pdf